Tapping a pen, shaking a leg, twirling hair: we have all been in a classroom, a meeting, or a public place where we or someone else engaged in repetitive behavior, a type of self-stimulatory movement known as stimming. For people with autism, stimming can include movements like flicking fingers or rocking back and forth. These actions are thought to help people cope with overwhelming sensory environments, regulate emotions, or express joy, but stimming is not well understood. And while the behaviors are mostly harmless and, in some instances, beneficial, stimming can also escalate and cause serious injuries. Yet it is a difficult behavior to study, especially when it involves self-harm.

“The more we learn about how benign active tactile sensations like stimming are processed, the closer we will be to understanding self-injurious behavior,” said Emily Isenstein, PhD (’24), LEND Trainee, Medical Scientist Training Program trainee at the University of Rochester School of Medicine and Dentistry, and first author of the study in NeuroImage that offers new clues about how people with autism process touch. “By better understanding how the brain processes different types of touch, we hope to someday work toward more healthy outlets of expression to avoid self-injury.”

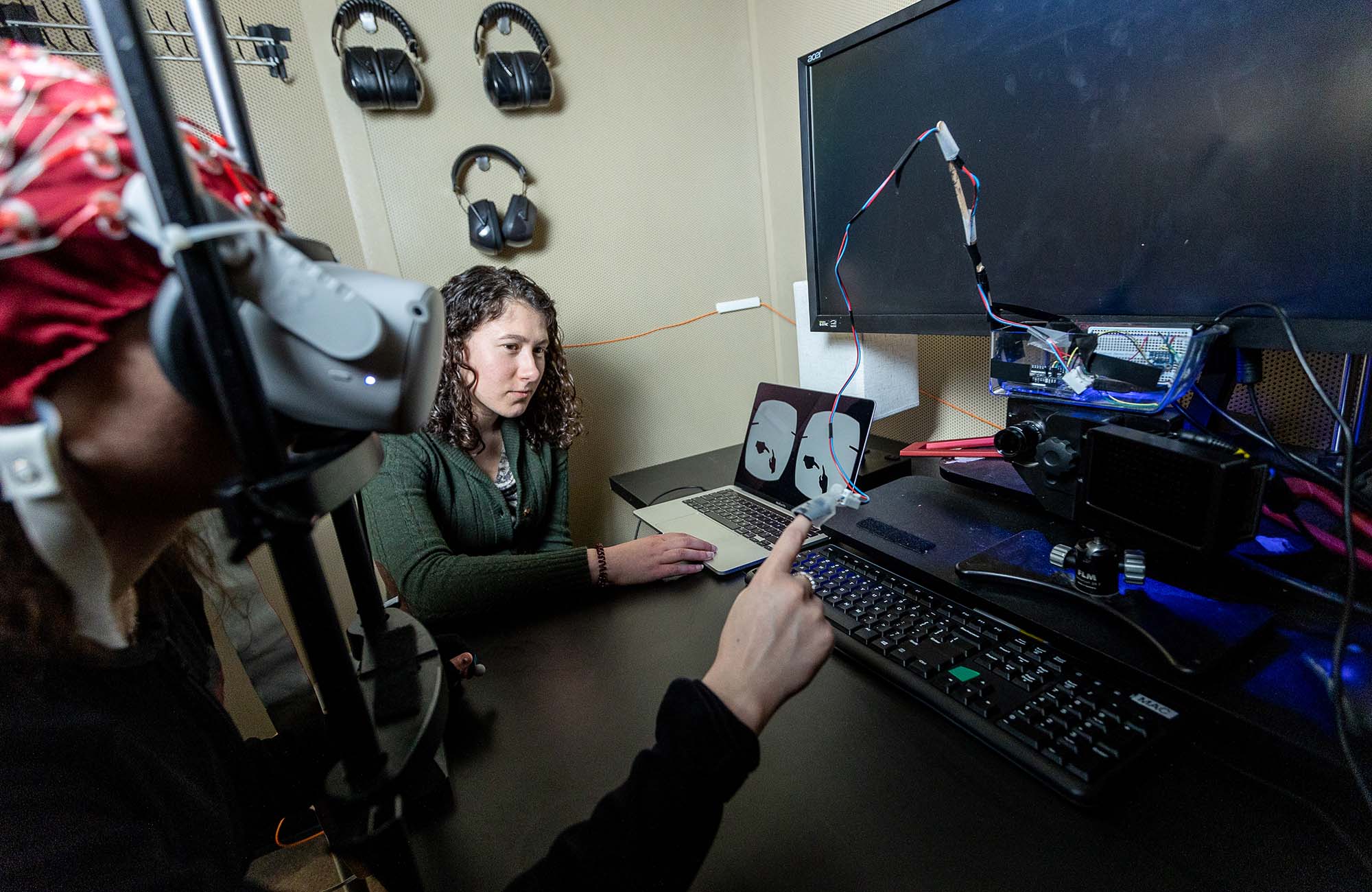

Researchers combined several technologies to create a more realistic sensory experience for active touch (reaching out and touching) and passive touch (being touched). A virtual reality headset simulated visual movement, while a vibrating finger clip, or vibrotactile disc, replicated touch. Using EEG, the researchers measured the brain responses of 30 neurotypical adults and 29 adults with autism as they performed active and passive touch tasks. In the active touch task, participants reached out to touch a virtual hand, giving them control over when they would feel the vibrations. In the passive touch task, a virtual hand reached out to touch them. In both tasks, participants felt vibrations when the two hands “touched,” simulating physical contact. As expected, the neurotypical group showed a smaller response in a brain signal to active touch than to passive touch, evidence that the brain devotes fewer resources to touch it controls and can anticipate.

However, the group with autism showed little difference in brain response between the two types of touch. Both responses more closely resembled the neurotypical group's response to passive touch, suggesting that in autism, the brain may have trouble distinguishing between active and passive inputs. “This could be a clue that people with autism may have difficulty predicting the consequences of their actions, which could be what leads to repetitive behavior or stimming,” said Isenstein.

The finding was surprising, particularly in adults. John Foxe, PhD, director of the Golisano Intellectual and Developmental Disabilities Institute at the University of Rochester and co-senior author of the study, remarked that it may indicate that the difference between children with autism and their neurotypical peers is even greater. “Many adults with autism have learned how to interact effectively with their environment, so the fact that we’re still finding differences in brain processing for active touch leads me to think this response may be more severe in kids, and that’s what we also need to understand.”

Location, Location, Location: Student Pools University Resources to Advance Autism Research

“I went into my PhD with the idea to have one big project that would use resources and expertise from both labs,” said Isenstein, who was co-mentored by Foxe and Duje Tadin, PhD, professor of Brain and Cognitive Sciences and co-senior author on the NeuroImage study. “I knew pretty early on that I wanted to study something that would help us understand stimming, but it’s a pretty complicated concept. Everyone has been incredibly supportive of me building my ‘dream team’ of collaborations to make this experiment possible.”

For her study, Isenstein drew on expertise in EEG and touch from the Frederick J. and Marion A. Schindler Cognitive Neurophysiology Lab, while the tools to study proprioception (the sense of one's own body position and movement) and virtual reality came from the Tadin Lab.

“Humans are not stationary creatures, and things that happen when you are sitting still getting an EEG do not really mimic how you interact with things in real life,” said Isenstein. “It [virtual reality] was a really exciting way to study proprioception and body movements.”

“It was easy to see the importance of the project Emily proposed. It was also clear how difficult it would be to conduct a rigorous EEG study of active and passive touch in autism,” said Tadin. “Emily achieved this goal by spearheading the first collaboration between John’s lab and mine, leveraging the resources and expertise from the Medical Center and the University, including the Mary Ann Mavrinac Studio X. This is a wonderful example of the boundless possibilities in Rochester for a motivated student who takes advantage of the robust research resources that are available here.”

Using EEG, vibrotactile inputs, and virtual reality in research is not novel, but designing a study in which the three technologies work in tandem is a newer approach. Isenstein also turned to the Mary Ann Mavrinac Studio X at the University of Rochester to brainstorm how to get these technologies to work together. Studio X is an extended reality hub on campus that offers workshops and equipment rentals and provides a multidisciplinary space for researchers and creators to collaborate on projects. “It really is an incredible resource for researchers trying to incorporate virtual reality into their research,” said Isenstein.

Getting the setup right took several rounds of testing. “It was really exciting when I could finally get to the point to use these technologies together,” said Isenstein. “We ended up mounting the VR headset onto a frame that people could just lean into and then their hands were free to move around and do whatever. And their head was still very stationary.” This setup allowed the team to simulate a realistic sensory environment without compromising data quality.

Researchers aim to apply these methods to more complex movements in people with autism to understand stimming better.

Additional authors include Ed Freedman, PhD, Grace Rico, and Zakilya Brown of the University of Rochester. This research was supported by the Schmitt Program in Integrative Neuroscience (SPIN) through the Del Monte Institute for Neuroscience pilot program, the Eunice Kennedy Shriver National Institute of Child Health and Human Development, the National Eye Institute, the National Institute on Aging, and the National Institutes of Health.
